The Great vSwitch Debate – Part 3

OK…in Part 1 of this series, we introduced the concept of a vSwitch and touched on some of the options available. In Part 2, we talked about some of the security features available in the vSwitch. In this Part 3, we’re going to talk about the load balancing features that are available in the vSwitch.

In a vSwitch, load balancing policies describe the different techniques that will be used for distributing the network traffic from all the virtual machines that are connected to the vSwitch and its subordinate Port Groups across the physical NICs associated with the vSwitch. There are several options available for load balancing as shown below:

- Load Balancing Policies

  - vSwitch Port Based (default)

  - MAC Address Based

  - IP Hash Based

  - Explicit Failover Order

Something to keep in mind is that all of these load balancing policies we’re going to discuss here affect only traffic that is outbound from your ESX host. We have no control over the traffic that is being sent to us from the physical switch. Also, all of these techniques apply to connections between the vSwitch uplink ports (i.e. the physical network adapters affiliated with a virtual switch) and the physical switch. Additionally, these load balancing policies have no effect on the connections between the virtual network adapter in a virtual machine and the vSwitch. Figure 1 shows the scope of discussion for this installment of our series on vSwitches.

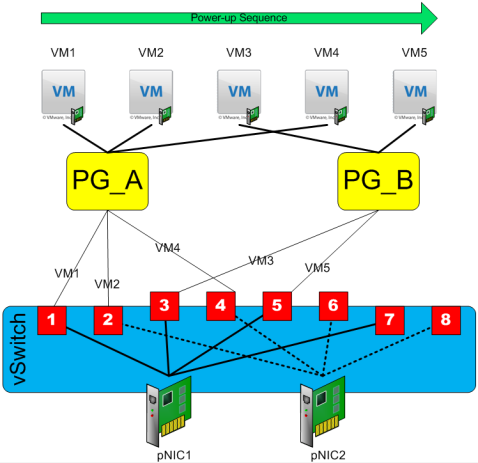

To help illustrate the load balancing concepts, we’re going to work with the configuration shown in Figure 2. In this graphic, we have a single vSwitch that is connected to two pNICs. We’ve also configured two Port Groups (PG_A and PG_B) on the vSwitch. For purposes of discussion, our vSwitch has eight ports configured (an impossible configuration in real life!).

We’ll use Figure 2 as the backdrop for all our discussions going forward. On an editorial note – in all my examples, I am assuming that the load balancing approach is set at the vSwitch level and is not overridden at the Port Group. If anyone has a really good example of why I would want to override the load balancing approach at the Port Group, please leave me a comment!

In every load balancing policy except IP Hash (where, as we’ll see, the affiliation is tied to an IP conversation rather than to a vNIC), the affiliations that are made between a vNIC and a pNIC are persistent for the life of the vNIC or until a failover event occurs (which we’ll cover a little later). What this means is that when a vNIC gets mapped to a pNIC, all outbound traffic from that vNIC will traverse the same pNIC until something (e.g. vNIC power cycle, vNIC disconnect/connect, or a detected path failure) happens to change the mapping.

Note: Each pNIC can be associated with only one vSwitch. If you want a pNIC to be affiliated with more than one network, you will need to use 802.1Q VLAN Tagging and Port Groups!

Now, on to the load balancing approaches!

vSwitch Port Based Load Balancing

The first load balancing approach I want to discuss is vSwitch Port Based (I’ll refer to this as simply “Port Based” load balancing), which is the default option. In the interest of full disclosure, let me say that this is my favorite type of load balancing. I tend to use this except in situations which can truly benefit from IP Hash.

In Port Based load balancing, each port on the vSwitch is “hard wired” to a particular pNIC. When a vNIC is initially powered on, it will be dynamically connected to the “next available” vSwitch port. See Figure 3 for the first example.

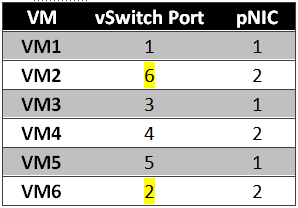

In the example shown in Figure 3 (Scenario 1), the virtual machines are powered up in order from VM1 to VM5. You’ll notice that VM3 is connected to PG_B, yet it still winds up affiliated with vSwitch port #3 and pNIC #1. This is because, as you’ll recall from Part 1, a Port Group is merely a template for a vSwitch connection rather than an actual group of ports. So, what we wind up with after this initial power-up sequence is shown in Table 1.

In Scenario 2, we’re building on the configuration presented in Scenario 1. In this second scenario, the following events have occurred since the end of Scenario 1:

- VM2 was powered off

- VM6 was powered on

- VM2 was powered back on

The result is shown in Figure 4.

Notice that vSwitch port #2 is now connected to VM6, yet it retains its association with pNIC2; whereas VM2 is now connected to vSwitch port #6, also on pNIC2. We wind up with the configuration represented in Table 2.
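To make the two scenarios concrete, here is a minimal Python sketch of the Port Based behavior described above. It is a model, not VMware code, and it rests on assumptions inferred from Figures 3 and 4: the vSwitch has eight ports, odd-numbered ports are hard-wired to pNIC1 and even-numbered ports to pNIC2, and a powering-on vNIC takes the lowest-numbered free port. The names `VSwitch`, `power_on`, `power_off`, and `port_to_pnic` are hypothetical.

```python
from __future__ import annotations

# Hypothetical model of Port Based load balancing (not VMware code).
# Assumed wiring, inferred from Figures 3 and 4: eight vSwitch ports,
# odd ports -> pNIC1, even ports -> pNIC2.
NUM_PORTS = 8
NUM_PNICS = 2


def port_to_pnic(port: int) -> int:
    """Static ("hard wired") port-to-pNIC association: 1->1, 2->2, 3->1, ..."""
    return (port - 1) % NUM_PNICS + 1


class VSwitch:
    def __init__(self) -> None:
        # port number -> name of the VM whose vNIC holds it (None = free)
        self.ports: dict[int, str | None] = {p: None for p in range(1, NUM_PORTS + 1)}

    def power_on(self, vm: str) -> int:
        """Connect the VM's vNIC to the next available (lowest free) port."""
        for port, owner in self.ports.items():
            if owner is None:
                self.ports[port] = vm
                return port
        raise RuntimeError("no free vSwitch ports")

    def power_off(self, vm: str) -> None:
        """Release the port held by the VM's vNIC; the port keeps its pNIC."""
        for port, owner in self.ports.items():
            if owner == vm:
                self.ports[port] = None

    def mapping(self) -> dict[str, tuple[int, int]]:
        """Return {VM: (vSwitch port, pNIC)} for every connected vNIC."""
        return {vm: (port, port_to_pnic(port))
                for port, vm in self.ports.items() if vm is not None}


sw = VSwitch()
for vm in ["VM1", "VM2", "VM3", "VM4", "VM5"]:  # Scenario 1 (Figure 3)
    sw.power_on(vm)
print(sw.mapping())

sw.power_off("VM2")  # Scenario 2 (Figure 4)
sw.power_on("VM6")
sw.power_on("VM2")
print(sw.mapping())
```

Under these assumptions the second mapping shows VM6 on port 2 (still wired to pNIC2) and VM2 on port 6 (also pNIC2), which matches the Scenario 2 description: the port keeps its pNIC, and the vNIC simply lands on whatever port is free.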

MAC Address Based Load Balancing

MAC Address Based load balancing, which I’ll call “MAC Based,” simply uses the least significant byte (LSB) of the source MAC address (the MAC address of the vNIC) modulo the number of active pNICs in the vSwitch to derive an index into the pNIC array. So, basically what this means in our scenario with two pNICs is this:

Assume the vNIC MAC address is 00:50:56:00:00:0B; the LSB is therefore 0x0B, or 11 decimal. To calculate the modulo, divide the MAC LSB by the number of pNICs using integer division (here, 11 div 2) and take the remainder (1 in this case) as the modulo. The array of pNICs is zero-based, so a modulo of 0 selects pNIC1 and a modulo of 1 selects pNIC2; this vNIC therefore uses pNIC2.

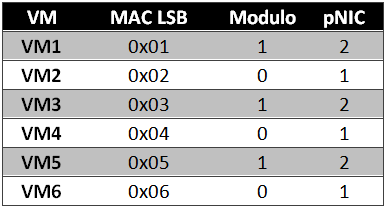

If we look at a scenario where we have six VMs with sequential MAC addresses (at least the LSB is sequential), we wind up with a situation like the one shown in Figure 5.

Notice that I removed the vSwitch ports from this diagram. That’s because they really don’t come into consideration with MAC based load balancing. What we wind up with is VM to pNIC mapping as shown in Table 3. The MAC LSB column shows the least significant byte of the MAC address for the vNIC in each VM. The modulo value shows the remainder of (MAC LSB div (# pNICs)), and the pNIC column indicates to which pNIC the vNIC will be affiliated.
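The MAC Based arithmetic from the worked example can be sketched in a few lines of Python. The six MAC addresses below are hypothetical (sequential LSBs 0x0A through 0x0F, which include the 00:50:56:00:00:0B example), and `mac_based_pnic` is an illustrative name only; the sketch simply applies MAC LSB mod (# pNICs) as described above and prints a table in the shape of Table 3.

```python
from __future__ import annotations

NUM_PNICS = 2


def mac_based_pnic(mac: str, num_pnics: int = NUM_PNICS) -> tuple[int, int, int]:
    """Return (MAC LSB, modulo, 1-based pNIC) for a vNIC MAC address."""
    lsb = int(mac.split(":")[-1], 16)  # least significant byte, e.g. "0B" -> 11
    modulo = lsb % num_pnics           # zero-based index into the pNIC array
    return lsb, modulo, modulo + 1     # modulo 0 -> pNIC1, modulo 1 -> pNIC2


# Hypothetical sequential MACs: 00:50:56:00:00:0A ... 00:50:56:00:00:0F
macs = [f"00:50:56:00:00:{lsb:02X}" for lsb in range(0x0A, 0x10)]

print(f"{'VM':<4} {'MAC address':<18} {'MAC LSB':>7} {'Modulo':>6} {'pNIC':>5}")
for n, mac in enumerate(macs, start=1):
    lsb, modulo, pnic = mac_based_pnic(mac)
    print(f"VM{n:<2} {mac:<18} {lsb:>7} {modulo:>6} {pnic:>5}")
```

With sequential LSBs the VMs simply alternate between pNIC1 and pNIC2. If the LSBs happened to be all even (or all odd), every vNIC would land on the same pNIC, which is how MAC Based balancing can end up worse than Port Based.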

As you can see, there is no real advantage to using this over vSwitch Port Based load balancing; in fact, you could potentially wind up with a worse distribution with MAC based load balancing. So…even though this is an option, I see no real justification for taking the extra steps to configure MAC based load balancing. This was the default load balancing approach used in ESX 2.x. I file this one in the “interesting but worthless” category.

IP Hash Based Load Balancing

IP Hash based load balancing (I’ll call it simply “IP Hash”) is the most complex load balancing algorithm available; it also has the potential to achieve the most effective load balancing of all the algorithms. The problems with this algorithm, from my perspective, are the technical complexity and the political complexity. We’ll discuss each as we go along.

In general, IP Hash works by creating an association with a pNIC based on an IP “conversation”. What constitutes a conversation, you ask? Well, a conversation is identified by creating a hash between the source and destination IP addresses in an IP packet. OK, so what’s the hash? It’s a simple hash (for speed), basically ((LSB(SrcIP) xor LSB(DestIP)) mod (# pNICs)), which all boils down to this: take an exclusive OR of the Least Significant Byte (LSB) of the source and destination IP addresses, and then compute the modulo over the number of pNICs. It’s actually not that different from the calculation used in the MAC based approach.
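Here is the simplified hash as a short Python sketch, with each step of the formula spelled out. It implements the formula exactly as stated above, so treat it as an illustration rather than a description of the vmkernel’s actual code (the real ESX implementation may differ in detail). The IP addresses and the function name `ip_hash_index` are hypothetical.

```python
import ipaddress


def ip_hash_index(src_ip: str, dst_ip: str, num_pnics: int) -> int:
    """Zero-based pNIC index: (LSB(SrcIP) xor LSB(DestIP)) mod (# pNICs)."""
    src_lsb = ipaddress.IPv4Address(src_ip).packed[-1]  # last octet of the source IP
    dst_lsb = ipaddress.IPv4Address(dst_ip).packed[-1]  # last octet of the destination IP
    return (src_lsb ^ dst_lsb) % num_pnics


# 10 xor 21 = 31 and 31 mod 2 = 1, so this conversation uses index 1 (pNIC2).
idx = ip_hash_index("192.168.1.10", "192.168.1.21", num_pnics=2)
print(f"pNIC{idx + 1}")
```

Note that the hash sees only addresses: every packet between the same two IP addresses produces the same index, no matter how many TCP connections make up the conversation.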

When configuring IP Hash as your load balancing algorithm, you should make the configuration setting on the vSwitch itself and you should not override the load balancing algorithm at the Port Group level. In other words, ALL devices connected to a vSwitch configured with IP Hash load balancing must use IP Hash load balancing.

A technical requirement for using IP Hash is that your physical switch must support 802.3ad static link aggregation. Frequently, this means that you have to connect all the pNICs in the vSwitch to the same pSwitch. Some high-end switches support aggregated links across pSwitches, but many do not. Check with your switch vendor to find out. If you do have to terminate all pNICs into a single pSwitch, you have introduced a single point of failure into your architecture.

It is also important for you to know that the vSwitch does not support the use of dynamic link aggregation protocols (i.e., PAgP and LACP are not supported). Additionally, you’ll want to disable Spanning Tree protocol negotiation and enable portfast and trunkfast on the pSwitch ports.

All this brings up the political complexity associated with IP Hash – the virtualization administrator can’t make all the configuration changes alone. You have to involve the network support team, which, in many organizations, isn’t worth any possible performance improvement!

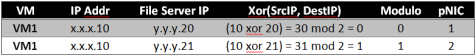

So, let’s assume that you have one VM (one single IP address) copying files between two file servers (two unique IP addresses). See Table 4.

As you can see, we now have one VM taking advantage of two pNICs. There are obvious performance advantages to this approach! But, what happens if the two file servers have IP addresses that compute out to the same hash value, as shown in Table 5?

In this example, both conversations map to the same pNIC, which kind of defeats the purpose of implementing IP Hash in the first place! What it all boils down to is this:

To derive maximum value from the IP Hash load balancing algorithm, you need to have a source with a wide variety of destinations.
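The Table 4 and Table 5 situations, and the point about needing a wide variety of destinations, can be reproduced with the same simplified hash. All addresses below are hypothetical: the VM is 10.0.0.10 and the “file servers” are made-up hosts. With two pNICs the formula reduces to comparing the lowest bit of each last octet, so any two servers whose last octets are both even (or both odd) collide on one pNIC.

```python
from collections import Counter
import ipaddress

NUM_PNICS = 2


def ip_hash_pnic(src_ip: str, dst_ip: str, num_pnics: int = NUM_PNICS) -> int:
    """1-based pNIC for an IP conversation, using the simplified hash."""
    src_lsb = ipaddress.IPv4Address(src_ip).packed[-1]
    dst_lsb = ipaddress.IPv4Address(dst_ip).packed[-1]
    return (src_lsb ^ dst_lsb) % num_pnics + 1


vm = "10.0.0.10"  # one VM, one source IP address

# Like Table 4: two file servers whose conversations hash to different pNICs.
good = [ip_hash_pnic(vm, "10.0.0.20"), ip_hash_pnic(vm, "10.0.0.21")]

# Like Table 5: two file servers whose conversations hash to the same pNIC.
bad = [ip_hash_pnic(vm, "10.0.0.20"), ip_hash_pnic(vm, "10.0.0.22")]

# Many destinations: 100 hypothetical hosts spread across both pNICs.
spread = Counter(ip_hash_pnic(vm, f"10.0.1.{host}") for host in range(1, 101))

print("two servers, different hashes:", good)
print("two servers, same hash:       ", bad)
print("100 destinations:", dict(sorted(spread.items())))
```

The first pair splits across pNIC1 and pNIC2, the second pair piles onto pNIC1, and the 100 destinations divide evenly, which is exactly why a single source needs many destination addresses before IP Hash pays off.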

Where most people want to use IP Hash is for supporting IP Storage on ESX/i (remember, that’s my notation for either ESX or ESXi). Since there is a single source IP address (the IP address of the vmkernel), you need to have multiple destination IP addresses to be able to take advantage of the load balancing features of IP Hash. In many IP Storage configurations, this is not the case. NFS is the primary culprit – it is very common to have a single NFS server sharing out multiple mount points, which all share the NFS server’s IP address. Many iSCSI environments suffer from the same problem – all the iSCSI LUNs frequently live behind the same iSCSI Target, thus a single IP address.

The lesson to this story is really quite simple:

If you want to use IP Hash to increase the effective bandwidth between your ESX/i host and your IP Storage subsystem, you must configure multiple IP addresses on your IP Storage. For NFS, this means either multiple NFS servers or a single server with multiple aliases, and for iSCSI, it means that you’ll want to configure multiple targets with a variety of IP addresses.

So, as you can see, the IP Hash load balancing algorithm offers the best (under the right set of circumstances) and the worst of all options. It offers the best load balancing and performance under the following circumstances:

- IP Hash load balancing configured on a vSwitch with multiple uplinks

- Static 802.3ad configured on all relevant ports on the pSwitch(es)

- Multiple IP conversations between the source and destinations with varying IP addresses

If you don’t meet ALL those requirements, IP Hash gains you nothing but complexity. IP Hash gains you the worst of all options because of the following:

- Significantly increased technical complexity

- Significantly increased political complexity

- Potential introduction of a single point of failure

- No performance gains if there is a single IP conversation

The long and short of it comes down to this – use IP Hash load balancing when you understand what you’re doing and you KNOW that it will provide you concrete advantages. This is not the load balancing algorithm for the new VI administrator, nor for an administrator who is not on good terms with their network support team. My recommendation for most environments is to start with vSwitch Port Based load balancing and monitor your environment. If you see that your network throughput is causing a problem and you can satisfy the conditions I set out above, then – and only then – implement IP Hash as your load balancing algorithm.

Explicit Failover Order Load Balancing

This is the load balancing algorithm for the control freak in the crowd. With the Explicit Failover Order load balancing algorithm in effect, you are essentially not load balancing at all! Explicit failover will utilize, for all traffic, the “highest order” uplink from the list of Active pNICs that passes the “I’m alive” test. What does “highest order” mean? Well, it’s simply the pNIC that sits highest in the Active Adapters list, i.e. the order in which you arrange the adapters in the Failover Order configuration.

You manage the failover order by placing pNICs into the “Active Adapters,” “Standby Adapters,” and “Unused Adapters” section of the “Failover Order” configuration for the vSwitch or Port Group. pNICs listed in the “Active Adapters” section are considered when calculating the highest order pNIC. If all of the pNICs in the Active Adapters section fail the “I’m alive” test, then the pNICs listed in the “Standby Adapters” section are evaluated. Adapters listed in the “Unused Adapters” section are never considered for use with the Explicit Failover Order load balancing approach.
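A minimal sketch of the selection rule just described. It assumes that “highest order” means an adapter’s position in its list (earliest in the list wins), and it models the “I’m alive” test as a hypothetical set of adapters that are currently up; `select_uplink` is an illustrative name, not a VMware API. Unused adapters are simply never passed in. The usage reproduces the reversed Active/Standby Port Group arrangement that Massimo describes in the comments.

```python
from __future__ import annotations


def select_uplink(active: list[str], standby: list[str], alive: set[str]) -> str | None:
    """Return the one uplink that carries all traffic under Explicit Failover Order.

    Assumption: list position is the order, so the first live adapter in
    `active` wins; `standby` is consulted only if every active adapter is down.
    Unused adapters are never passed in, so they can never be selected.
    """
    for group in (active, standby):
        for pnic in group:
            if pnic in alive:  # hypothetical stand-in for the "I'm alive" test
                return pnic
    return None  # no usable uplink


alive = {"vmnic0", "vmnic1"}
pg1 = select_uplink(active=["vmnic0"], standby=["vmnic1"], alive=alive)
pg2 = select_uplink(active=["vmnic1"], standby=["vmnic0"], alive=alive)
print(pg1, pg2)  # vmnic0 vmnic1

alive.discard("vmnic0")  # vmnic0 fails its "I'm alive" test
print(select_uplink(active=["vmnic0"], standby=["vmnic1"], alive=alive))  # vmnic1
```

Because only one adapter is ever returned, all traffic for the vSwitch or Port Group rides a single pNIC until it fails, which is why this policy is really failover rather than load balancing.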

This is another policy that I file in the “interesting but worthless” category.

Load Balancing and 802.1Q VLAN Tagging

It’s important to note that, for all of the load balancing options I’ve discussed, you can still use 802.1Q VLAN Tagging. The thing you have to be careful of is to ensure that all ports configured in a load balanced team have the same VLAN trunking configuration on the pSwitch. Failure to configure all the pSwitch ports correctly can result in very difficult to troubleshoot traffic isolation problems – it’s a good way to go bald in a hurry!

Load Balancing Summary

To summarize on network load balancing options…even though there are four load balancing options available for your use, I recommend that you stick with one of two:

- vSwitch Port Based Load Balancing: This is the default (and preferred) load balancing policy. With zero effort on the part of the virtualization administrator, you achieve load balancing that is – in most cases – good enough to meet the demands of the majority of virtual environments. This is where I recommend that you begin, especially if you are new to VMware technologies. Stand this configuration up in your environment and monitor to see if the network is a bottleneck. If it is, then look to IP Hash as a possible enhancement for your setup.

- IP Hash Load Balancing: This is the most complex and, possibly, the most rewarding load balancing option available. If you’re comfortable working in your virtual infrastructure, if you understand the networking technologies involved, and if you have a good working relationship with your network administrator, IP Hash can yield significant performance benefits. The problem I have with this algorithm is that I see it implemented in far too many environments where network throughput is not a problem. People seem to think that a gigabit (or even a 10Gb) Ethernet connection just doesn’t have enough guts to handle 20, 30, or more virtual machines. I beg to differ! In most cases, you’ll find that a single GbE connection is more than capable of handling the load, so why not let it? The area where I do sometimes see a need for IP Hash is with IP based storage, but even here, it is frequently not needed.

Do yourself a favor – if you don’t need to use IP Hash, and especially if your environment isn’t set up to be able to take advantage of the benefits of IP Hash, KISS it and stay with vSwitch Port Based Load Balancing. You’ll be glad you did!

81 responses to “The Great vSwitch Debate – Part 3”


Ken:

Your blog is absolutely fantastic.

/Hoff

Thanks, Chris…hope I can keep it that way!

Great work, Ken.

Ken,

> If anyone has a really good example of why I would want to override the load balancing approach at the Port Group, please leave me a comment!

I am not in front of a vCenter Console, so this is off the top of my head, but…. isn’t it true that if you create two PGs that override the default vSwitch policies, you can configure both PGs with “reverse” Active/Passive Load Balancing (i.e. Failover) so that PG1 uses NIC1 and keeps NIC2 in standby, whereas PG2 uses NIC2 and keeps NIC1 in standby? This is useful if you want to segment traffic in NIC-constrained configurations (e.g. 2 NICs supporting both VM traffic and VMotion, or whatever).

As I said just an idea…

Massimo.

Yes, Massimo, that is true, but I question if the gain is worth the pain. If you’re running GbE, chances are good you’re not bandwidth constrained to begin with, so what are you really gaining by adding the complexity? Yes, you can deterministically say you know what traffic is using which interface (until there’s a failure), but do you really care?

I suppose there are environments where you really need to separate traffic – and NIC constraints would be a motivator, but I would advocate starting with the defaults and implementing the PG overrides ONLY if there was a real problem to solve.

I agree Ken.

I was just thinking about situations where it’s not worth having very many NICs but yet it would make sense to separate traffic that might have potentially high bursts (i.e. VMotion) from something that needs to be somewhat predictable (i.e. VMs).

Is this for everyone? Not at all. I agree we have enough CPU / Memory and Network bandwidth today that half would be sufficient.

Massimo.

Fantastic trio of articles. I have been considering IP Hash load balancing for my IP storage for a while, and I feel you’ve saved me some pain.

Great Blog…

Thanks Steven!

Glad to have saved you the trouble. That’s what I’m hoping to do with this series … convince people that the simple way is usually the best way.

KLC

Ken,

One concept that is still unclear to me is how load balancing works on the IP Storage vSwitch/PortGroup. Your examples deal solely with how the vNICs of various VMs are assigned to pNICs, but how does that translate to IP Storage?

For example, I’m planning on building an ESX server (my first) with 12 pNICs and using 3 pNICs for my NFS storage traffic. How are connections to the pNICs made in that scenario?

Your blog is awesome so far!! Thanks!!

Hi Cameron,

The vSwitch doesn’t care whether it is a VM or a vmkernel device connecting to it. The vSwitch is a layer 2 device that passes Ethernet frames, nothing more, nothing less. All of the concepts I discussed apply equally to virtual machines, VMotion, and IP storage (NFS / iSCSI).

Thanks, and glad you’re enjoying the articles.

KLC

Ken,

I understand that the concepts are the same. What I don’t understand is how ESX connects IP storage processes to the pNICs. Specifically, I’m trying to understand how the load would be distributed across multiple pNICs when pointing to a single NFS host with one NFS volume. Does ESX make a separate connection for each VMDK/File? Or only one for each NFS volume?

I’ve only deployed a single-pNIC ESXi host so far, so perhaps I’m misunderstanding how the vmkernel ports are supposed to work.

Hi Cameron,

For a single NFS server presenting a single share (or any number of shares on the same IP address), all traffic will traverse a single pNIC, regardless of load balancing options. The only way to have your traffic spread across multiple pNICs is to use IP Hash load balancing with multiple src/dest IP address pairs.

“Connections,” in the TCP/IP sense, don’t matter for load balancing. It’s all about “Conversations” with IP Hash. A conversation is comprised of a source IP address and a destination IP address. It doesn’t matter how many connections are present in the conversation, all traffic will use a single pNIC.

Hope this helps!

KLC

There are only five VMs in figure 3. This text is a little confusing:

“In the example shown in Figure 3 the virtual machines are powered up in order from VM1 to VM6.”

For IP Hash Based Load Balancing, must you be running IPv4 — what happens if you have IPv6 traffic or some other protocol?

Thanks for this series of articles.

Thanks for catching my counting mistake – it’s fixed.

If you’re using a protocol other than IPv4, then whatever values happen to be at the “standard IPv4 address” offset within the datagram are used as if they were IP addresses.

Good question! Thanks for visiting…

KLC

Hi,

Something is confusing me. You say in chapter 6 that the link between a vSwitch port and a pNIC is static. What I do not understand, however, is how that relationship is defined. Is it an even/odd relationship? But then it is not applied in figure 5 of the sixth chapter…

The whole point is that I’m trying to understand why vNIC 6 is mapped to the first pNIC and not the second one…

Should I have commented on chapter 6? Well, the bottom line is about the vNIC/pNIC relationship in the port based load balancing scenario, right?

Thanks a lot; this set of articles was very useful to me as an introduction to vSwitches. As a total newcomer to the ESX universe, I can say that you really made your point clearly.

Clement.

The mapping between vNIC and vSwitch(Port Group) is static for the current vNIC invocation (i.e. from the time the vNIC is powered on until it is powered off). Once a vNIC is powered off, it leaves an “available” vSwitch port, so the next time a vNIC is powered on, it is assigned to the first available vSwitch port.

I wouldn’t worry too much about it – it’s one of those academic exercises that, in the end, doesn’t really make much difference. In most cases, your host will be up for a long time and VMs will be powered on and off many, many times which will cycle the vNICs across the vSwitch ports so many times that it almost becomes random.

Thanks for stopping by – and sorry it took so long for me to reply!

KLC

Hi Ken,

a) Chapeau on the lesson… bravo!

b) You mentioned in fig. 1 that no policy applies “here” (the vmkernel is there)… but then at the end you recommend ‘I do sometimes see a need for IP Hash is with IP based storage’… what have I missed?!

c) Where on Earth did you get all that XOR, LSB… algorithm information from? (It’s almost as if you had written it yourself.) A M A Z I N G!

Ken – We will be using IP Hash Load Balancing on our two-pNIC Etherchannel, which will be carrying our “service console”, “vmotion”, and “fault tolerance logging” ports. Would you agree this is the preferred way?

Without knowing more about the details of your environment, I would probably stick to the default vSwitch Port ID based load balancing algorithm. I would even consider creating multiple port groups and having the SC & VMotion share one port group with pNIC1 as primary and pNIC2 as standby and have FT on the other PG with the primary/standby reversed.

FT & VMotion are both (potentially) heavy bandwidth users and I would like to keep them separated as much as possible. IP Hash will not give me the guaranteed separation I can get with the approach mentioned above.

Something to consider…

KLC

I’m still confused about the pSwitch configuration. If I am using the default vSwitch Port Based Load Balancing, do I still configure an 802.3ad (static) port-group on my pSwitch? Why or why not? Keep in mind I’m running this on Cisco 3750 switches, which can do cross-switch 802.3ad.

No, you do not configure 802.3ad on your pSwitch if you are using the default vSwitch Port Based LB. You don’t need to make any special configuration changes on your pSwitch – that’s one of the great things about the default policy! When you configure .3ad, your pSwitch is going to expect all ports in the port group to act as one single port. This configuration used to work back in the ESX 2.x days (and it may still work now…), but it is not recommended and is not supported (by either Cisco or VMware).

HTH,

KLC

How does the switch then deal with the mac-address going down different physical ports during the load balancing?

Hi Ryan,

The MAC address will not “float” between the various ports in the port group. Once a vNIC has established an affiliation with a pNIC, ALL outbound traffic for that vNIC will traverse the same pNIC (the only exception is IP Hash, where the affiliation is tied to the IP conversation rather than the vNIC). The only time that the vNIC’s traffic will change pNICs is in the event of a path failure – in which case the vSwitch will send a gratuitous ARP packet to the pSwitch to notify it of the change in interface.

Thanks!

KLC

I think your point about needing to bundle the pNICs with 802.3ad (etherchanneling) when using IP Hashing is a good one. An important implication is that this virtually eliminates the possibility of using IP Hashing when running ESX on blade servers, as *no* blade server switching technology that I’m aware of would allow an etherchannel across 2 switch modules. If you were using passthrough modules to allow each blade access to external switches directly, then you could connect those to some upstream stacked 3750s, 6500s running VSS, or Nexus 7000s (and soon 5000s) using VPCs.

Just like the hashing on the network side using etherchannels, it’s important (as you said) to choose the hashing that’s best for what that box does. If it’s a server that dumps files to one other box, then IP hashing isn’t buying anything. If it’s an application server that handles thousands of connections from a wide distribution of client IP addresses, IP hashing is probably the best option.

I’m surprised ESX hasn’t taken a tip from Cisco and added another level, which is source/dest IP *AND* TCP port hashing. This allows even 2 machines that talk a lot to each other to have multiple conversations balanced properly over multiple links.

Do you know of a way to determine the vNIC to pNIC mapping for VM guests that are currently running?

Great information, by the way. Thank you very much.

Hi Ken,

First of all, thanks for this great article. I have a question related to 802.3ad and link aggregation: is it true that, for all load balancing techniques in VI, we still need to implement 802.3ad for inbound traffic load balancing?

Thanks

Yes…you will need to configure (static) link aggregation on both ends of the connection. If your VI is configured for IP Hash and your pSwitch is not configured for 802.3ad, then your pSwitch can get “confused” because the MAC address of the source VM can be seen on multiple ports (possibly a different port for each IP conversation).

KLC

Is there a way to check which VM is using which uplink port?

You can check the MAC address table on the physical switch, but I want to find it in vCenter or through the CLI.

Thanks in advance

Please comment on the pros and cons of having, say, 1 vSwitch to do everything versus 2 vSwitches.

Is it more flexible to have 1 vSwitch? Can load balancing be done across 2 vSwitches?

The primary advantage of using multiple vSwitches (2 or more) is physical separation. If you have, for example, a DMZ and a production network, chances are good that they are on different physical networks. This means that you would want to maintain that separation in the virtual world as well. That would mean different vSwitches.

As for load balancing across two vSwitches – nope.

HTH,

KLC

Hi Ken!

Great set of articles!

I’ll apologize now for the long winded description… 🙂

We have 2 Dell R900 servers running vSphere ESX 4.0u1 with 16 cores, 128GB RAM and 3 Quad Port Intel pNICs providing 12 GigE ports, connected to 3 Dell PowerConnect 6248 switches linked via rear stacking modules (pSW1 -> pSW2, pSW2 -> pSW3, pSW3 -> pSW1).

Backend storage is via an EMC CX700 FC SAN; the LUN hosting the VMs is an 8-drive RAID6 (1.3TB) array.

I have read numerous articles from VMware and others, then tested most of those network configurations (some blew up). Still, I am not convinced the network performance is what it should/could be…

The current configuration;

R900 with 6 GigE ports across 2 pNICs (3 ports/NIC), 2 ports -> switch1, 2 ports -> switch2, 2 ports to switch3.

25 test VMs (Win7 Enterprise, 2 vCPU, 2GB RAM) have 1 vNIC (vmxnet3 10Gbps) connected to 1 vSW with 1 port group (64 ports). The vSW has 6 pNICs set active (none marked failover/standby) with IP Hash routing enabled.

6248 pSWs have Spanning Tree PortFast enabled (remember these are Dells…)

My desktop to VM and VM to desktop file copy avg. is 16MB/s.

My VM to VM file copy avg. is 20MB/s

I had expected a lot higher network throughput…

Any explanation or recommendations would be gratefully appreciated!

Thanks!

Bill

Great article.

If you have 1 VM communicating directly with 1 physical server, say a backup server, does this mean that no matter which load balancing policy you select, you will never get more than one card’s total throughput?

thanks

Yes…unless you have multiple IP addresses that you can talk to (such as multiple iSCSI targets)…look at the Nexus 1000v for true teaming

Ken,

Any idea if the IP Hashing algorithm changed at all in ESX 4? I’ve calculated the results using the formula provided and specified VM IP addresses such that half of my load should travel over one of two 10GbE NICs and the other half over the other. However, I’m seeing roughly a 70/30 split.

Andy

Nope…I’ve not dug that deeply into v4, so I really don’t know for sure.

Hi Ken, there is something I don’t understand: how does the vSwitch handle load balancing between pNICs with Port Based load balancing? I know it is “Port Based” load balancing, but I mean: how does ESXi know how to balance vSwitch ports between pNICs?

The load balancing algorithms are baked into the network stack of the vmkernel. The thing to remember is that (with the exception of IP Hash), the pNIC load balancing is done with vNIC granularity. This changes with the Nexus 1000v…

It’s really fairly simple: the vSwitch has logical “ports” associated with it. Each port has a static association with a particular pNIC. As vNICs are powered up, they form an association with a particular vSwitch logical port, which is further associated with a pNIC. The association between vNIC & vSwitch port is static until the vNIC is power cycled; the association between vSwitch port and pNIC is static until a power cycle or a pNIC path failure.

Great Blog! Understandably written, but still with the necessary technical background.

Thanks for stopping by – and thanks for the kudos. Much appreciated!

Hi Ken,

Great Article!

can you advise me how to configure following vSwitch/Network Setup?

We have 10Gb pSwitches, up to 30 ESX hosts with 2x10Gb network interfaces, and shared iSCSI storage.

Which vSwitch configuration would you prefer to implement if only 2x 10Gb network interfaces are available?

We are using a single vSwitch with two 10Gb uplinks and default settings (see Setup 1).

According to the vSphere 4.0 best practices, you should separate/prioritize the Service Console if possible, and I know: additional interfaces 🙂

vSwitch Setups:

1.) Configuration after default Installation:

vSwitch0 2x10GB eth – default settings

– Service Console + Ip Storage/vmotion + VMs/portgroups (vmnic0 active, vmnic1 active)

2.) vSwitch0 2x10GB eth – NIC Teaming/Failover Order – Separate Service Console from IP Storage

– vSwitch (vmnic0 active, vmnic1 active)

– Service Console (nic teaming: vmnic0 active, vmnic1 Standby)

– Ip Storage (nic teaming: vmnic1 active, vmnic0 Standby)

– vmotion

– vmkernel2 vmotion only

– VMs/portgroups

2a.) vSwitch0 2x10GB eth – NIC Teaming/Failover Order – Separate Service Console #2

– vSwitch (vmnic0 active, vmnic1 standby)

– Service Console (vmnic1 active, vmnic0 standby)

– vmkernel1 – Ip Storage

– vmkernel2 vmotion only

– VMs/portgroups

2b.) Separate Service Console from vmotion

3.) vSwitch0 2x10GB eth – NIC Teaming/Failover Order – Separate IP Storage from All

– vSwitch (vmnic0 active, vmnic1 standby)

– Service Console

– vmkernel1 – Ip Storage (nic teaming: vmnic1 active, vmnic0 Standby)

– vmkernel2 vmotion only

– VMs/portgroups

4.) vSwitch0 2x10Gb eth – NIC Teaming/Failover Order – Separate IP Storage from All

– vSwitch (vmnic0 active, vmnic1 standby)

– Service Console

– vmkernel1 – IP Storage (NIC teaming: vmnic1 active, vmnic0 standby)

– vmkernel2 – VMotion only

– VMs/portgroups

Which setup would you prefer, and why?

Well…two pNICs is always a pain! When will HW vendors ever learn that you need MORE?!

With only two pNICs, you really have no choice other than to have a single vSwitch with both pNICs associated and active.

If your traffic volume is fairly low, just leave everything at the default – no reason to over-engineer.

Assuming you have enough traffic to warrant looking more closely:

First, I’d like to see everything on its own VLAN – including Service Console & IP Storage. This adds a minor bit of complexity to your installation process, but it’s easy enough to deal with.

I’d suggest separating the management traffic from the VM traffic: Service Console with vmnic0 active and vmnic1 standby, VMs with vmnic1 active and vmnic0 standby. You then have two “heavy hitter” users (IP Storage & VMotion). I’d suggest separating these two, also. My preference here is to put IP Storage with the Service Console. Why? Well, the Service Console is your least demanding consumer (unless you’re doing SC-based backups…) AND, from a security perspective, I feel it’s safer to put these two together. IP Storage network traffic is not encrypted, so having it on the management interface reduces the possibility of one of your VM consumers figuring out a way to “eavesdrop” using some current or future VLAN vulnerability.

I’d then put VMotion & VMs together with vmnic0 standby & vmnic1 active. While some will argue that you shouldn’t combine these two because VMotion could have a negative impact on VM traffic, I disagree. Yes, there will be a negative impact to VM network performance during a VMotion; however, you would also experience a performance hit by combining IP Storage & VMotion. It’s my position that you’re better off putting VMotion with the VMs because of the following:

– VMs will (typically) not use very much network bandwidth

– You want your VMotion to complete as quickly as possible

– IP Storage is going to consume significantly more bandwidth than “normal” VM traffic

– There is no free lunch! With only two pNICs, you can’t set things up like you’d really like them, so take the lesser of the two evils
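Ken's two-pNIC recommendation can be expressed as a per-port-group failover table. The sketch below is a hypothetical model, not vSphere configuration syntax. The port group name `VM Network` and the helper functions are illustrative. Each port group simply uses the first healthy uplink in its active-then-standby list.

```python
# Hypothetical encoding of the two-pNIC plan above:
# port group -> (active uplinks, standby uplinks), in failover order.
TEAMING = {
    "Service Console": (["vmnic0"], ["vmnic1"]),
    "IP Storage": (["vmnic0"], ["vmnic1"]),
    "VMotion": (["vmnic1"], ["vmnic0"]),
    "VM Network": (["vmnic1"], ["vmnic0"]),
}


def uplink_in_use(active, standby, failed):
    """Return the first healthy uplink in failover order, or None.

    Simplification: each port group here has a single active uplink, so
    load balancing across several active uplinks is not modeled.
    """
    for nic in [*active, *standby]:
        if nic not in failed:
            return nic
    return None


def placement(failed=frozenset()):
    """Map each pNIC to the port groups currently sending through it."""
    result = {}
    for pg, (active, standby) in TEAMING.items():
        nic = uplink_in_use(active, standby, failed)
        result.setdefault(nic, []).append(pg)
    return result


print(placement())                   # both pNICs healthy
print(placement(failed={"vmnic1"}))  # vmnic1 path lost
```

Under normal operation the two heavy hitters (IP Storage and VMotion) sit on different pNICs. They only share one when a path fails, which is the "lesser of two evils" trade-off described above.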

HTH, and thanks for stopping by.

KLC

I guess the future direction is consolidating network connectivity into a few large-bandwidth pipes (CNAs are an example), and here the NetIOC feature in the DVS comes into the picture, in addition to VLAN segregation.

Yep – and consolidation does make sense in many ways. My only concern with this strategy is that there’s no capacity allocation for the isolation of management traffic, and not enough flexibility to carve up the pipes as you see fit. That’s one thing I do like about HP’s FlexConnect architecture – you can slice & dice to meet your needs. The problem is that, once you’ve carved up the pipe, there’s no allowance for a busy consumer in one “flex channel” (my term) to take advantage of unused capacity in another flex channel.
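The sketch below makes the "flex channel" trade-off concrete. It compares a fixed carve-up of a 10Gb pipe with a work-conserving, proportional-share allocation of the kind NetIOC shares aim for. It is a simplified water-filling model with made-up slices, shares, and demands. It is not NetIOC's actual scheduler.

```python
def static_partition(slices, demand):
    """Fixed carve-up: each consumer is capped at its slice; idle capacity is wasted."""
    return {c: min(demand[c], slices[c]) for c in slices}


def share_based(capacity, shares, demand):
    """Work-conserving proportional-share allocation (simple water-filling).

    Consumers with unmet demand split whatever capacity is left in
    proportion to their shares. Capacity a consumer cannot use is
    offered again to the others on the next round.
    """
    alloc = {c: 0.0 for c in shares}
    hungry = {c for c in shares if demand[c] > 0}
    remaining = float(capacity)
    while hungry and remaining > 1e-9:
        total = sum(shares[c] for c in hungry)
        offer = {c: remaining * shares[c] / total for c in hungry}
        remaining = 0.0
        for c in list(hungry):
            take = min(demand[c] - alloc[c], offer[c])
            alloc[c] += take
            remaining += offer[c] - take  # unused part goes back in the pool
            if demand[c] - alloc[c] <= 1e-9:
                hungry.discard(c)
    return alloc


# Hypothetical 10Gb pipe; slices and shares use the same 1:3:3:3 ratio.
SLICES = {"Mgmt": 1, "VMotion": 3, "VMs": 3, "IP Storage": 3}
SHARES = dict(SLICES)
DEMAND = {"Mgmt": 0.2, "VMotion": 0.0, "VMs": 2.0, "IP Storage": 7.0}

fixed = static_partition(SLICES, DEMAND)
shared = share_based(10, SHARES, DEMAND)
print({c: round(v, 2) for c, v in fixed.items()})   # IP Storage capped at 3
print({c: round(v, 2) for c, v in shared.items()})  # IP Storage gets its 7
```

In the fixed carve-up, IP Storage can never borrow the idle VMotion slice. In the share-based model, capacity is pulled back toward the 1:3:3:3 ratio once the other consumers get busy.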

I’d at least like to see two GbE links that I could dedicate to management traffic in addition to the two “fat pipes” for everything else. If someone is concerned enough about the infrastructure costs, they could put everything on the two fat pipes & not populate the GbE links. Most of the customers I’ve dealt with aren’t going to sweat the additional costs – they’re more interested in isolation and manageability.

Thanks for the comment!

KLC

Can VMotion take advantage of more than one 1Gb NIC? Our 4.1 VMware hosts have 12 NICs, but I only have 2 NICs assigned to VMotion (active/passive). It takes too long to migrate all 40 VMs in a 5-host cluster when maintenance is needed. I would like to add active NICs to the VMotion vSwitch to increase performance. We have already increased the number of VMs allowed to migrate at once to 8. Will the host take advantage of additional NICs, or will all the traffic go through a single NIC? Thanks…

Yes, it can. You’ll double the VMotion speed by adding another gigabit pNIC; tested that one already!

The load balancing algorithms have been significantly updated since I wrote these blogs. I don’t doubt that it can now take advantage of multiple pNICs – but it didn’t use to be able to…

How are the pNICs chosen when a VM has access to more than one PG and those PGs share the same pNICs? I have two servers with 4 pNICs each, so I’m thinking about one vSwitch with all 4 pNICs. Some VMs (like routers) have access to more than one PG. I will also use iSCSI with multipathing, because I have a SAN with 6 pNICs and want to use 4-5 of them for iSCSI.

Hi,

I would like to tell you about a problem that occurred when we were trying to configure Network Load Balancing (NLB) in VMware.

We were using a Foundry switch (a Brocade FastIron Edge GS648P).

1- We installed and configured NLB on Windows Server running on VMware.

2- We used multicast mode.

After that, all the MAC addresses of our VMware machines went to 00:00:00:00:00:00.

We are trying to find the cause, but everything seems to be configured correctly.

Do you think the problem is at the Foundry switch level?

Have you had this kind of problem in the past?

If so, what would be the solution?

Thank you

Hi Ken!

Thanks for doing all the work to simplify the issue(s), but not too much. I’m coming late to the game here, with a different focus than you probably consider.

We work with small businesses, which have very small budgets. I am virtualizing a rat’s nest of servers onto an ESXi 4.1 system for one of my clients. I have a FreeNAS box hosting the storage for the ESXi 4.1 box (which is essentially diskless). The network infrastructure is GbE. The FreeNAS box provides RAID-Z and replication (both local and off-site). VM backups are done with ghettoVCB. The FreeNAS box does a great job of link aggregation with LACP to the D-Link switch, giving us 3Gbps. The bottleneck is the 1Gbps NIC in the ESXi box.

From what I understand, there is nothing I can do about this (the FreeNAS box has only one IP address).

Do I understand correctly?

On another issue, I assume it would benefit the workstations on the LAN connecting to the VMs if we had an additional NIC for them to use? If so, what would be the best way to configure this: would we make a first VLAN for the IP storage and one ESXi pNIC, and a second VLAN for the workstations and a second ESXi pNIC?

Or would port-based load balancing distribute the traffic?

Thanks in advance for any (more) help!

Ken, hope you can help with a question:

If I have 1 pNIC attached to 1 vSwitch (and the vSwitch is configured to use vSwitch Port Based load balancing) and a dozen VMs attached to the vSwitch, obviously they’re all talking out 1 pNIC.

If I then add additional pNICs to the vSwitch, do the VMs automatically balance across those NICs, or does this not occur until the VMs are power cycled?

I’ve got 4 pNICs added to a vSwitch and only 1 ever gets traffic… but the VMs on the vSwitch never get restarted, as they’re production server VMs. I suspect 1 pNIC was attached to the vSwitch, all the server VMs were added, and then the rest of the pNICs were added.

Until I read your articles I’d been looking into using IP hash (it’s not that hard to create a static trunk on a switch), but if I can get away without having to do it, just by restarting some server VMs… I’d probably do that.

What do you think?

Thanks

Salmaan

I’d suggest you try disabling the one pNIC that has all the VMs associated with it. That will force them to fail over to another pNIC without requiring you to reboot your servers. You’ll probably miss a couple of packets during the failover, but TCP should take care of that for you.

You could also just disconnect & reconnect the pNICs – although that would likely incur more downtime than just disabling the one pNIC (and would require a lot more manual manipulations!)
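Salmaan's situation and Ken's workaround can be simulated with a small model. The following are assumptions, not documented ESX behavior:

* VMs are bound round-robin to whatever uplinks exist when they power on.
* Bindings never move on their own.
* Ports on a failed uplink are spread round-robin over the surviving uplinks.

What happens when the disabled pNIC is re-enabled (failback) is not modeled. The function names are hypothetical.

```python
from collections import Counter


def bind(vms, uplinks):
    """Bind each VM to an uplink round-robin at power-on (assumed policy)."""
    return {vm: uplinks[i % len(uplinks)] for i, vm in enumerate(vms)}


def fail_over(binding, uplinks, failed):
    """Move VMs off failed uplinks, spreading them over the survivors.

    Assumption: survivors are chosen round-robin; VMs on healthy uplinks
    keep their existing binding.
    """
    survivors = [u for u in uplinks if u not in failed]
    new, moved = {}, 0
    for vm, nic in binding.items():
        if nic in failed:
            new[vm] = survivors[moved % len(survivors)]
            moved += 1
        else:
            new[vm] = nic
    return new


vms = [f"vm{i}" for i in range(1, 13)]
before = bind(vms, ["vmnic0"])  # a dozen VMs powered on with a single pNIC
uplinks = ["vmnic0", "vmnic1", "vmnic2", "vmnic3"]  # three pNICs added later

# Adding uplinks does not touch existing bindings: all traffic stays on vmnic0.
print(Counter(before.values()))

# Disabling vmnic0 forces its VMs onto the new pNICs without a reboot.
after = fail_over(before, uplinks, failed={"vmnic0"})
print(Counter(after.values()))
```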

Ken, first of all, great articles about vSphere networking. About load balancing and the number of pNICs: when you have 4 pNICs, would you add them to 1 load-balancing vSwitch (active/active) with management, vMotion, IP storage, and VM traffic separated by VLANs?

Or would you create 2 vSwitches using active/standby failover?

Regards, Berend

Hi Berend,

Thanks for stopping by!

As to your question – I would go with a single vSwitch with functions separated by port groups (VLANs). VMware is very good at doing the load balancing and traffic management. I see no reason to cut your available bandwidth in half by going with an active/standby configuration. Kind of leads back to one of my basic design principles: Unless there’s a really good reason, don’t change a default behavior.

Best of luck!

KLC

Great job! I enjoyed reading it very much, as it’s easy to understand and makes perfect sense! Question: I am using a single switch with two uplink adapters for multiple heartbeat networks (two VMkernel ports). I understand why we set up active/passive, but I do not understand why we want to disable “do not failback”.

I’m new to VMware stuff, and maybe back in 2009 load balancing in the vSwitch worked differently, but…

> keep in mind is that all of these load balancing policies we’re going to discuss here affect only traffic that is outbound from your ESX host

is now incorrect, at least for “MAC based” and “Port based” balancing. As soon as the LB algorithm has selected the pNIC through which a VM’s traffic will egress, the physical switch learns the VM’s MAC on that port only. It then forwards all incoming traffic for that VM (MAC) out of the learned port. When “IP based” LB is used, you need to configure a LAG on the pSwitch for the two pNICs used for VM traffic. Otherwise the pSwitch will go crazy, because the VM’s MAC will be visible/active on more than one port.
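The commenter's point about inbound traffic follows from ordinary MAC learning on the physical switch. The sketch below is a generic learning-switch model, not any vendor's implementation. The switch port names, the MAC address, and the LAG name `Po1` are hypothetical.

```python
VM_MAC = "00:50:56:00:00:01"  # hypothetical VM MAC address
# Hypothetical cabling: each ESX uplink lands on its own physical switch port.
UPLINK_TO_SWITCHPORT = {"vmnic0": "Gi1/1", "vmnic1": "Gi1/2"}


class LearningSwitch:
    """Generic MAC-learning switch: remembers the last port a source MAC used."""

    def __init__(self):
        self.mac_table = {}
        self.moves = 0  # times a MAC was learned on a different port ("flapping")

    def learn(self, src_mac, port):
        old = self.mac_table.get(src_mac)
        if old is not None and old != port:
            self.moves += 1
        self.mac_table[src_mac] = port

    def port_for(self, dst_mac):
        # Inbound frames for a known MAC go out the learned port only.
        return self.mac_table.get(dst_mac)


def simulate(uplinks_used, lag=False):
    """Feed the VM's outbound frames to the switch; with a LAG, member ports
    are treated as one logical port (hypothetically named Po1)."""
    sw = LearningSwitch()
    for nic in uplinks_used:
        sw.learn(VM_MAC, "Po1" if lag else UPLINK_TO_SWITCHPORT[nic])
    return sw


port_based = simulate(["vmnic0"] * 4)                      # one vNIC -> one uplink
ip_hash_no_lag = simulate(["vmnic0", "vmnic1"] * 2)        # flows hash to both uplinks
ip_hash_lag = simulate(["vmnic0", "vmnic1"] * 2, lag=True)

print(port_based.port_for(VM_MAC), port_based.moves)          # Gi1/1 0
print(ip_hash_no_lag.port_for(VM_MAC), ip_hash_no_lag.moves)  # Gi1/2 3
print(ip_hash_lag.port_for(VM_MAC), ip_hash_lag.moves)        # Po1 0
```

In the port-based case, inbound traffic for the VM arrives only on the switch port facing vmnic0. The outbound uplink choice therefore indirectly steers inbound traffic as well.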

VMware ESXi 5.5 has a BUNCH of new load balancing algorithms. So let’s see how these can be used, and whether we can finally get NFS to use all 8 of those gigabit ports we have in an EtherChannel! LOL. VMware is too far behind in the world of NFS versions: what is NFS up to now, 4.x with RDMA, and VMware is stuck at 3? I call them out as NFS haters at EMC! LOL

Awesome discussion... learnt a lot. Thanks, Ken!

Thanks for the write-up, very informative. I was wondering what you would do in my scenario, especially as I have chosen the one option you say is interesting but worthless: explicit failover.

I have the slight issue that some of our ESX hosts at one datacenter are connected to both a 1Gbps switch and a 100Mbps switch (the latter is there for redundancy of last resort only, due to budget constraints). Isn’t this the only valid load balancing option if you have mixed-speed physical NICs (due to switch constraints)?

I would like to know your opinion.

Kind Regards

Adrian

Hi Adrian, apologies for the delayed response (hey, it’s only been 30 months!)… yeah, I think you have stumbled on a use case where explicit failover actually makes sense. Let us know how it’s worked out over the past 2+ years 🙂
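Adrian's mixed-speed case can be illustrated with a small model of explicit failover order. The model also covers the failback setting asked about earlier in the thread. This is a simplified sketch:

* Only link state is considered (no beacon probing).
* The uplink names and speeds are hypothetical.
* failback=False is modeled as "stay on the current uplink while it remains healthy".

```python
from collections import Counter

# Hypothetical host from Adrian's comment: vmnic0 -> 1Gbps switch,
# vmnic1 -> 100Mbps switch kept only as a last resort.
ORDER = ["vmnic0", "vmnic1"]
SPEED_MBPS = {"vmnic0": 1000, "vmnic1": 100}


def select_uplink(order, link_up, current=None, failback=True):
    """Explicit failover order: use the highest-ranked healthy uplink.

    Simplified failback model: with failback=False, stay on the current
    uplink as long as it is still healthy, even if a higher-ranked one
    has recovered.
    """
    healthy = [nic for nic in order if link_up.get(nic, False)]
    if not healthy:
        return None
    if not failback and current in healthy:
        return current
    return healthy[0]


def port_based_spread(num_ports, uplinks):
    """For contrast: port-based balancing spreads ports over all uplinks."""
    return Counter(uplinks[p % len(uplinks)] for p in range(num_ports))


both_up = {"vmnic0": True, "vmnic1": True}
fast_down = {"vmnic0": False, "vmnic1": True}

nic = select_uplink(ORDER, both_up)         # normal operation
nic = select_uplink(ORDER, fast_down, nic)  # 1Gbps path fails
with_failback = select_uplink(ORDER, both_up, nic, failback=True)
without_failback = select_uplink(ORDER, both_up, nic, failback=False)

print(with_failback, SPEED_MBPS[with_failback])        # vmnic0 1000
print(without_failback, SPEED_MBPS[without_failback])  # vmnic1 100
print(port_based_spread(10, ORDER))  # half the ports would ride the 100Mbps link
```

In this model, leaving failback enabled is what moves traffic back onto the 1Gbps link once it recovers. With failback disabled, the host keeps running on the 100Mbps link until that link itself fails.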

Wow, what an AMAZING article. Even though I didn’t understand it the first few times through, I had a sense it was something VERY important, so I persisted (not a slight on the article, but on my inexperience).

I am so glad I did. When the penny dropped it was a revelation. I now know why my SAN performance is so woeful: it was set up by our external vendor using IP Hash, and my SAN presents…. yup… a single Virtual IP….. DOH!

I also hadn’t realised that VMware was just a round-robin type deal between VM and pNIC; I had assumed the 3 x 1Gbps network leads into the SAN were giving us a 3Gbps pipe.

At least I can stop chasing a silver bullet to fix this problem. I now have the ammo (pardon the clichés) to go hounding the boss for 10Gb links into the (single-VIP) SAN.

Thank you for putting in the effort to share this info with your peers.

Simon Trangmar

Adelaide / Oz – Land Down Under
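Simon's single-VIP problem comes down to IP hash being deterministic per source/destination pair. The sketch below uses a commonly cited simplification of the IP hash: XOR of the source and destination addresses, modulo the number of uplinks. The exact hash ESX/ESXi uses may differ by version. The addresses and uplink names are hypothetical.

```python
import ipaddress

UPLINKS = ["vmnic0", "vmnic1", "vmnic2"]  # hypothetical 3 x 1Gbps team


def ip_hash_uplink(src, dst, uplinks):
    """Simplified IP hash: XOR the two IPv4 addresses, modulo the uplink count.

    Real ESX/ESXi hashing may differ in detail; the key property is that a
    given source/destination pair always maps to the same uplink.
    """
    h = int(ipaddress.IPv4Address(src)) ^ int(ipaddress.IPv4Address(dst))
    return uplinks[h % len(uplinks)]


VMK_IP = "10.0.0.11"   # hypothetical VMkernel (iSCSI/NFS) address
SAN_VIP = "10.0.0.50"  # hypothetical single virtual IP presented by the SAN

# Every frame between this pair hashes the same way, so it always uses one uplink.
print(ip_hash_uplink(VMK_IP, SAN_VIP, UPLINKS))  # vmnic0

# Several distinct target IPs give the hash something to spread.
targets = ["10.0.0.50", "10.0.0.51", "10.0.0.54"]
print({t: ip_hash_uplink(VMK_IP, t, UPLINKS) for t in targets})
```

In this model, a single VIP on the SAN side and a single VMkernel address on the host side mean IP hash cannot give one host more than one link's worth of bandwidth to that target. That matches what Simon observed.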

A little late on the response here, but glad that this article was helpful! It’s getting long in the tooth (cliche!), but most of it is still relevant. Best of luck Down Under 🙂